Have you ever wondered how AI can take a long piece of text and turn it into a short, clear summary or rephrase it without changing the meaning? That’s the magic of generative AI in NLP (natural language processing). These AI tools are getting smarter every day, but they still face some common challenges. Let’s dive deeper into how this works and what hurdles come along when AI tackles tasks like text summarization and paraphrasing.

How Generative AI Understands Language

Generative AI in NLP uses large language models (LLMs) to process human language in a way that feels natural. These models rely on machine learning techniques like deep learning and neural networks. By training on vast amounts of data, they learn patterns in human language and generate new, coherent, and contextually relevant text. For instance, generative pre-trained transformers (GPTs) are NLP models used for tasks like language translation, sentiment analysis, and content creation.

One of the key features of generative AI in NLP is its ability to generate human-like text in response to user queries. Because LLMs create new responses rather than selecting from fixed ones, they are useful for applications like virtual assistants and chatbots. The transformer architecture behind these models lets them take the surrounding context into account, so their replies tend to be coherent and relevant to the question asked, whether the task is answering a query or helping with something more complex.
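To make "learning patterns from data and generating new text" a little more concrete, here is a deliberately tiny sketch. It is not how GPT works internally (GPT uses transformer neural networks trained on far more data); it is a toy bigram model, and the function names `train_bigram_model` and `generate` as well as the sample corpus are made up for illustration. It only shows the basic idea of predicting the next word from what came before.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers


def generate(followers, start_word, max_words=8, seed=0):
    """Build a sentence by repeatedly sampling a word seen after the current one."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start_word]
    for _ in range(max_words - 1):
        candidates = followers.get(output[-1])
        if not candidates:  # no observed continuation, so stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical three-sentence "training set", purely for illustration.
corpus = (
    "the model reads text . the model learns patterns . "
    "the model generates text ."
)
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Every word pair in the output was seen in the training text, but the full sentence may be new. Real LLMs follow the same "predict what comes next" idea while conditioning on much longer context.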

Popular Applications of Generative AI in NLP

The role of generative AI in NLP has grown significantly across a range of applications. One popular use is in chatbots and virtual assistants, which rely on generative AI models to create responses that are coherent and contextually relevant. By processing large amounts of language data, these systems can help users with queries and translate from one language to another, breaking down language barriers. Generative AI and NLP work together to improve tasks like language generation, often handling them more flexibly than traditional AI approaches.

Another area where generative AI in NLP plays a key role is translation. Generative AI improves language understanding by applying deep learning techniques, and unlike traditional AI, it is more flexible in adapting to different contexts. However, generative AI still has limitations, such as difficulty handling highly complex language and guaranteeing accuracy. Here are a few common applications of generative AI in natural language processing:

- Chatbots and virtual assistants for answering user queries.

- Language translation to help overcome language barriers.

- Content creation by generating new and relevant text.

- Processing language more efficiently in NLP tasks.

- Integration of generative AI with other AI systems for advanced solutions.

The Role of GPT in Advancing Natural Language Processing

Generative AI in NLP has advanced greatly with the help of Generative Pre-trained Transformers (GPT). These models handle both language understanding and language generation, which makes them effective across a wide range of NLP tasks. GPT is one of the most significant generative AI technologies in the field, helping NLP models generate human-like language. It plays a major role in breaking language barriers in global communication and in powering AI services. GPT models are pre-trained on large amounts of unlabeled text by learning to predict the next word (a self-supervised approach) and are then often fine-tuned with supervised learning, which helps them deliver contextually relevant responses.

NLP Uses GPT for Language Tasks

NLP uses GPT to perform a variety of tasks, from answering user queries to generating high-quality text. These models make NLP tools more powerful by enabling them to interpret and produce natural language, which in turn improves areas like language generation and translation.

Generative AI vs Traditional Methods

Unlike older methods, which typically rely on fixed rules or narrowly trained models, generative AI often produces more flexible and dynamic responses. Comparing the two shows that generative AI is better at picking up context and meaning. This makes tools like GPT valuable for a wide range of AI services.

NLP Can Help Break Language Barriers

Using generative AI in NLP, tools like GPT have made it easier to break down language barriers by generating coherent text in multiple languages. This gives users across different cultures a better way to interact and makes communication more efficient.

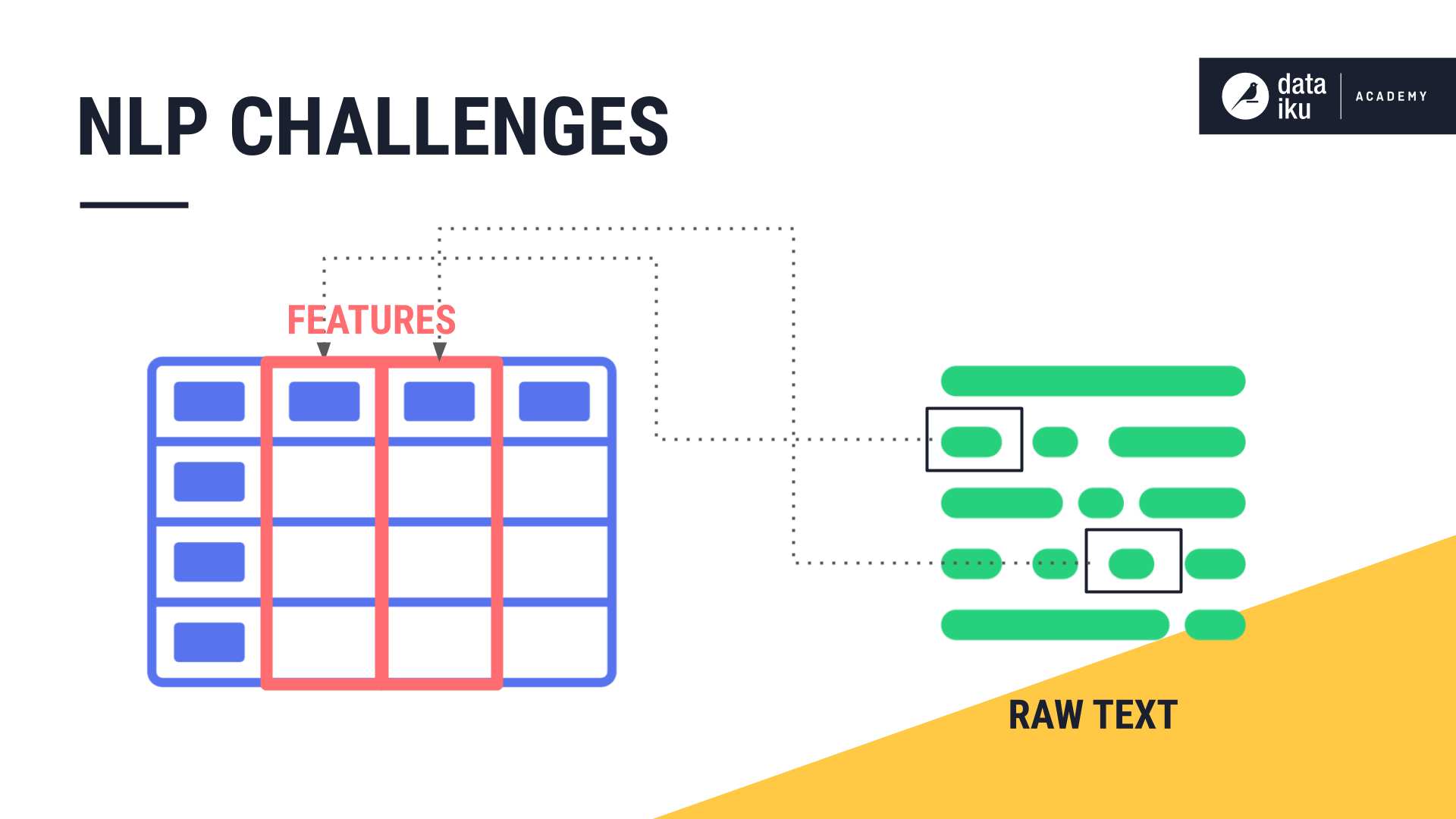

What are some common challenges when applying generative AI to NLP tasks like text summarization and paraphrasing?

Generative AI has transformed NLP tasks, making them faster and more efficient. However, it still faces a few challenges when it comes to ensuring quality and accuracy. Let’s explore some of these difficulties below.

- Loss of Key Information: One of the biggest challenges is that AI sometimes struggles to include all the important details when summarizing a text. In text summarization, it can miss out on crucial information, leading to incomplete summaries. As Julie, a Digital PR Specialist at AI Fire, points out, “Common challenges include ensuring the summaries are accurate and complete, as AI sometimes misses key details.” Julie also notes that AI might oversimplify or even change the meaning during paraphrasing, which can be risky for important content. Human review is often needed to ensure the AI’s work is correct and nothing significant is left out.

- Maintaining Context: When AI paraphrases a sentence, it can change the original meaning or oversimplify the content. This is especially risky when dealing with sensitive or complex topics. As Julie, Digital PR Specialist at AI Fire, mentions, “There’s also a risk that the AI may oversimplify or change the meaning when paraphrasing,” making it essential to double-check the AI’s output, especially when accuracy is crucial.

- Technical Language Barriers: Another challenge is handling technical jargon or industry-specific terms. Generative AI may not always understand the exact meaning of certain technical words, which can affect the quality of summaries or paraphrased texts. Here is what Andrew Adams, CEO & Founder of Vluchten Volgen, shares: “In aerospace, challenges with AI in NLP tasks include understanding technical jargon and ensuring precision in summaries. It’s vital that the information is both clear and exact, given the complexities involved.” According to Andrew, precision is key when working with technical content, especially in industries like aerospace, where even a small mistake can lead to serious consequences. The AI must understand these terms to provide summaries that are both clear and accurate.

- Bias in AI Models: AI models can unintentionally introduce bias into the output. For example, if the AI has been trained on biased data, it may reflect that bias when summarizing or paraphrasing content. This can result in summaries that favor certain viewpoints or ignore important details.

- Need for Human Review: AI is not perfect, and human review is often required, especially for important or sensitive content. As Andrew and Julie both highlight, while AI can be fast and efficient, human oversight is still needed to ensure that the AI’s work is accurate and doesn’t miss any key points.
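The "loss of key information" and "human review" points above can be partly automated with a simple pre-check. The sketch below is one possible approach, not an established method: it flags a summary for human review when numbers or must-keep terms from the source are missing. The function names `missing_details` and `needs_human_review`, the glossary, and the aerospace-style sample text are all hypothetical. A check like this cannot detect a changed meaning, so it supplements human review rather than replacing it.

```python
import re

NUMBER_PATTERN = r"\d+(?:\.\d+)?"  # integers and simple decimals


def missing_details(source, summary, required_terms=()):
    """List numbers and required terms that appear in the source but not in the summary."""
    source_numbers = set(re.findall(NUMBER_PATTERN, source))
    summary_numbers = set(re.findall(NUMBER_PATTERN, summary))
    missing = sorted(source_numbers - summary_numbers)

    source_lower = source.lower()
    summary_lower = summary.lower()
    for term in required_terms:
        term_lower = term.lower()
        # Only demand terms that the source actually uses.
        if term_lower in source_lower and term_lower not in summary_lower:
            missing.append(term)
    return missing


def needs_human_review(source, summary, required_terms=()):
    """Flag the summary when any tracked detail was dropped."""
    return bool(missing_details(source, summary, required_terms))


# Hypothetical example data, invented for illustration.
source_text = (
    "The turbine inspection is due after 1200 flight hours, "
    "and the torque limit is 45.5 Nm."
)
summary_text = "The turbine inspection is due after 1200 flight hours."
glossary = ["torque limit", "turbine"]

print(missing_details(source_text, summary_text, glossary))
print(needs_human_review(source_text, summary_text, glossary))
```

Here the summary drops the torque value and the term "torque limit", so it would be routed to a reviewer. In practice the glossary would come from domain experts, since the model itself may not know which technical terms matter.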

Generative AI and the Future of Content Creation

Generative AI in NLP is reshaping content creation. With it, businesses and creators can generate text that is both natural and contextually relevant. Generative AI uses NLP algorithms to interpret the structures of language, enabling machines to create high-quality, human-like text. The future of content creation will rely heavily on GenAI and NLP tools, which can efficiently produce large volumes of text by processing large amounts of data. With NLP and LLMs, AI can now better interpret and respond to user queries.

Language models are typically pre-trained with self-supervised learning and can then be fine-tuned with supervised learning to generate coherent text and new content. They can also combine what they learn from two or more data sets, improving the AI’s ability to interpret language. Modern NLP keeps evolving as a result. Generative AI often outperforms traditional methods on tasks such as automating content creation, generating reports, and creating personalized user experiences, which makes it central to the future of many NLP applications.