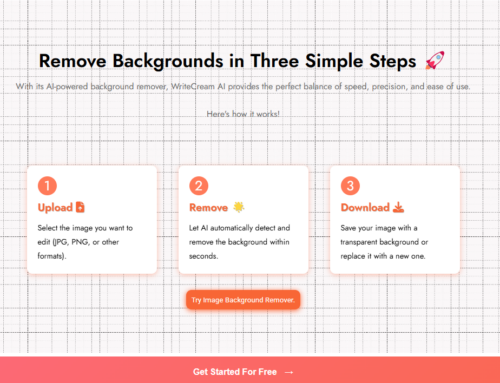

Have you ever wondered whether AI can actually debate like a human? We use AI for so many things today: answering questions, writing content, and even making financial decisions. But can AI hold a real debate, one where it understands emotions, context, and complex viewpoints? Let’s dive into this fascinating topic!

The Growing AI Debate

The AI debate is heating up like never before. In 2023, a well-known debater at a tech conference put it to the audience: “I think we need to ask ourselves: how much control should we give to AI?” This sparked a lively discussion on how AI algorithms and machine learning are shaping the world. From AI chatbots like ChatGPT to generative AI creating art, the use of AI is growing every day. Some believe that AI technologies will automate jobs and boost efficiency, while others fear that AI bias and over-reliance on AI models and tools could create new problems.

In 2024 and 2025, the global AI industry is expanding fast, with AI companies launching new tools on platforms like the App Store. The use of AI in the U.S. and beyond is changing everything, from customer service chatbots to AI robots helping in factories. But concerns remain. Can AI truly guide AI? Can we trust OpenAI and other leaders in AI development to keep things ethical? As more businesses look to use AI for automation, it’s clear that this debate is far from over, even as simpler tools like Invoice Templates for MS Excel also grow in popularity for streamlining operations.

Can AI Replace Human Intelligence?

The AI debate has now shifted to a big question: can AI replace human intelligence? Some tech leaders, including Elon Musk, believe that artificial general intelligence could surpass human thinking by 2030. But is that really possible? AI can process massive amounts of training data, but its knowledge is still limited to what it was trained on. At a recent Stanford University discussion, experts pointed out that while AI is used for automation, decision-making, and even creative tasks, it lacks true human understanding.

AI is also raising concerns about safety and security. Imagine AI being used to impersonate real people, generate fake news, or spread disinformation online. Even President Joe Biden has spoken about the need to mitigate the risks of AI. To ensure ethical implementation, experts suggest:

- Using explainable AI so decisions are clear and understandable

- Developing transparent AI to reduce bias in AI software

- Strengthening data privacy and security to protect users

- Integrating AI responsibly to prevent loss of human jobs in the workforce

- Focusing on robotics for assistance rather than full automation

As companies like DeepSeek work on developing AI, many wonder—will AI be our greatest tool or our biggest challenge? One thing is clear: this technology could change everything, but humans must stay in control.

Can Conversational AI Learn to Debate with Humans on Complex Topics?

AI can process huge amounts of information in seconds. It can analyze arguments, present facts, and even counter opinions. But can it truly engage in a deep and meaningful debate? AI lacks emotions and real-world experiences, which are important in discussions.

Here is what V. Frank Sondors, founder of Salesforge.ai, says about this:

“Conversational AI has the potential to engage in debates but there are many challenges. AI can process large volumes of information and generate responses, but understanding the nuances of human emotion, tone, and context in complex topics remains a struggle. AI lacks true comprehension, making it difficult for the system to engage in a debate that goes beyond surface-level arguments.”

This means AI can be helpful in debates but may struggle with understanding the depth of human conversation.

What Challenges Does AI Face When Dealing with Opposing Viewpoints?

One of the biggest challenges AI faces in debates is handling opposing viewpoints effectively. A good debate requires balance—it’s not just about presenting facts but also about considering emotions, values, and experiences. AI might struggle with this because it doesn’t have personal experiences or emotions.

V. Frank Sondors explains further:

“When AI encounters opposing viewpoints, the real challenge is its ability to maintain a neutral stance while effectively considering both sides of the argument. This is something that AI agents like ours at Salesforge.ai are continuously working to improve, but human nuance, empathy, and reasoning are still key aspects that AI can’t replicate fully.”

So, while AI can present both sides of an argument, it may not fully grasp the emotional depth or ethical dilemmas behind those viewpoints.

Could Debating AI Become a Tool for Resolving Disputes or Conflicts?

Imagine if AI could be used to help settle arguments between people. Could AI act as a neutral mediator, presenting different perspectives and helping people find common ground? AI could help by analyzing past conflicts, providing logical solutions, and ensuring fair discussions.

Kevin Shahnazari, Founder & CEO of FinlyWealth, shares his insights:

“AI debate capabilities have a real-world impact on decision-making. When we introduced an AI system to help users evaluate credit card options, it successfully managed two-way discussions about spending habits and financial goals. By presenting alternative viewpoints and helping users challenge their own assumptions about money management, the system achieved an 85% user satisfaction rate.”

However, AI struggles with emotions, which are a key part of resolving disputes. Kevin explains further:

“The biggest challenge I’ve observed with AI debates lies in handling emotional context. During our beta testing of financial advisory chatbots, we found that AI systems struggled to recognize when users needed emotional reassurance versus factual information. We solved this by programming our AI to acknowledge emotional cues and adjust its debate style accordingly – a feature that increased user trust by 40%.”

This suggests that AI can be a helpful tool in discussions, but human involvement is still necessary when emotions play a big role in decision-making.
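To make the idea of “acknowledging emotional cues” a little more concrete, here is a minimal Python sketch. It is not FinlyWealth’s actual system, whose implementation the quote does not describe. The cue words, function names, and reassurance wording are all hypothetical and chosen only for illustration; a real product would more likely rely on a trained sentiment model than on a keyword list.

```python
import re

# Hypothetical cue words, chosen only for illustration.
EMOTIONAL_CUES = {"worried", "anxious", "scared", "stressed", "overwhelmed", "afraid"}


def needs_reassurance(message: str) -> bool:
    """Return True if the message contains any of the illustrative cue words."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return bool(words & EMOTIONAL_CUES)


def choose_style(message: str) -> str:
    """Pick a response style: 'reassuring' or 'factual'."""
    return "reassuring" if needs_reassurance(message) else "factual"


def respond(message: str, facts: str) -> str:
    """Prefix the factual answer with an acknowledgement when a cue is found."""
    if choose_style(message) == "reassuring":
        return "That sounds stressful, and it is a common concern. " + facts
    return facts
```

For example, `choose_style("I'm worried about my debt")` selects the reassuring style, while a neutral question such as “Which card has the lowest APR?” gets a plain factual reply. Even this toy version shows why human oversight still matters: a keyword list will miss sarcasm, indirect phrasing, and anything outside its vocabulary.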

Should Artificial Intelligence Have Rights Like Humans?

The AI debate is now moving into an unusual but important question: should AI have rights like humans? Some believe that AI, especially advanced systems like GPT, is evolving beyond just a tool. With its ability to provide personalized responses, assist in problem-solving, and analyze huge amounts of data, AI is becoming more involved in human decision-making. But does that mean it deserves rights? Many experts argue that AI lacks emotions, consciousness, and moral responsibility, making this idea unrealistic. Others worry that giving AI rights could lead to serious socio-economic problems, including job losses and even legal confusion.

There are also concerns about AI’s potential for harm, especially in areas like facial recognition technology. Police departments and government agencies rely on it, but studies show it can be racially biased and disproportionately affect certain communities. Lawmakers worldwide are debating whether AI should be held accountable under frameworks such as human rights law. In response, many organizations need to focus on AI ethics, cybersecurity, and data protection. Even the Chinese government has started regulating AI to prevent misuse. An open letter from experts calls for stricter rules on AI-generated voices, health data, and personal data to address AI-related risks. The big question remains: can we truly control AI before our overreliance on it becomes a bigger problem?