Have you ever wondered how much energy it takes to run AI systems like GPT-4, which power some of the most advanced applications we use today? The AI field is rapidly expanding, but it faces one big challenge: AI power consumption. With the high demands of AI, tech experts are looking for ways to power AI models efficiently and sustainably. Let’s explore how much energy these models consume, the emerging technologies aiming to make AI greener, and whether AI can help improve its own efficiency.

Understanding AI’s Electricity Demand

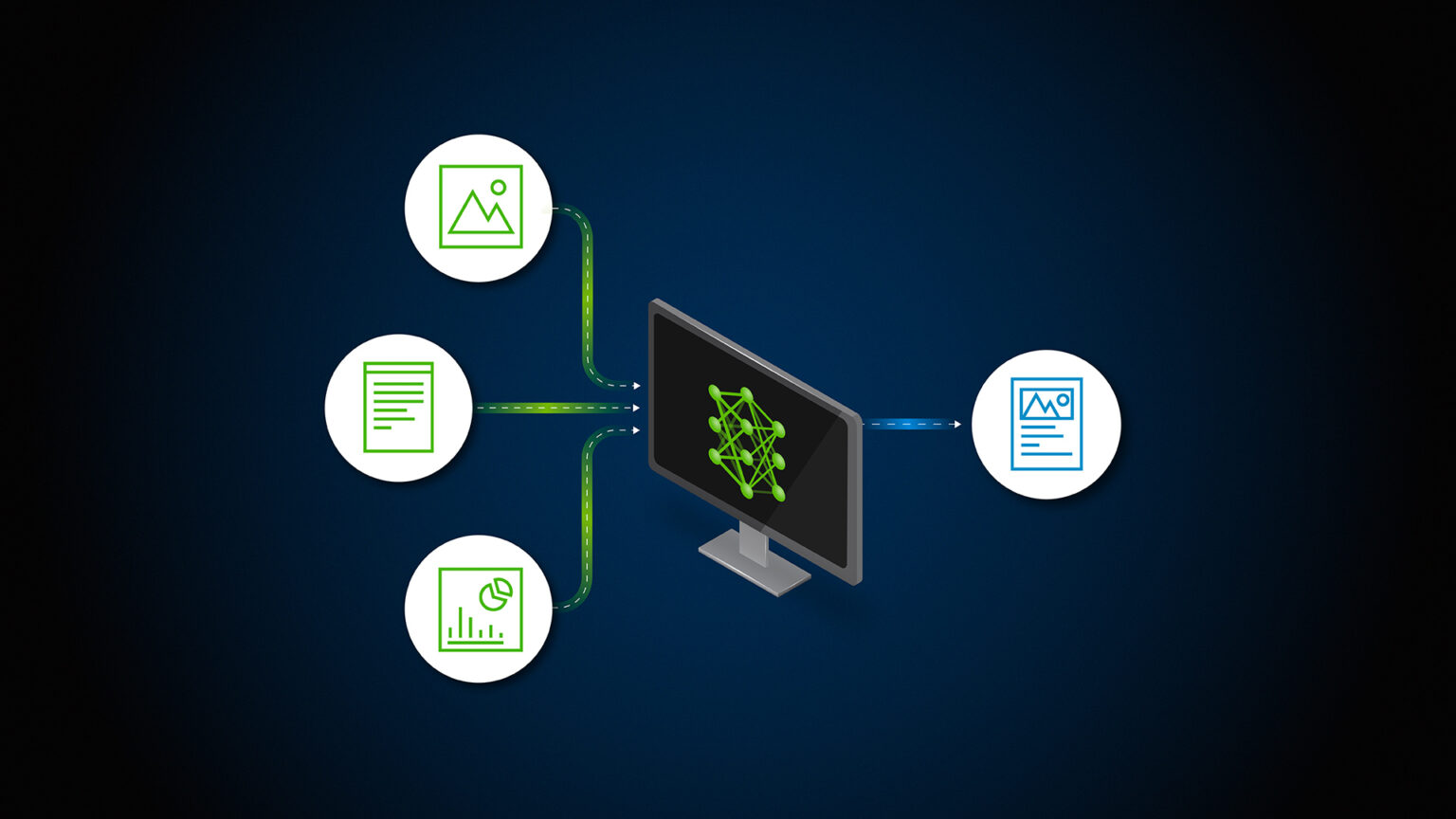

AI power consumption is higher than ever before, and it’s no surprise, considering the vast amounts of data it processes. Every time we use generative AI tools like ChatGPT or do a simple Google search, these systems rely on huge data centers to respond instantly. These data centers run around the clock, which drives massive electricity use.

The large language models that power AI are designed to handle enormous amounts of information, making their power consumption quite high. The International Energy Agency has highlighted that the electricity demand of AI, especially from large models, is continuously rising. As AI tools become more advanced, they need more electricity, leading to an increase in energy demand.

Artificial intelligence requires constant energy use to stay operational, especially for complex tasks in language models. This electricity consumption adds up significantly as people use AI models more frequently, raising concerns about emission levels. Some companies are exploring renewable energy sources to offset AI’s high power consumption, but it’s a challenge to balance efficiency with sustainability.

AI’s energy consumption also directly impacts the environment. With each use of generative AI, the need for electricity increases, which could add to emission concerns if not managed sustainably. Balancing AI’s electricity use with cleaner energy options is crucial for the future, especially as our dependency on AI models like ChatGPT grows.

How Much Energy Is Consumed by Training Large AI Models Like GPT and GPT-4?

The power needs of large AI models like GPT-4 are truly staggering. Training these models involves processing massive amounts of data, which requires a lot of computational power. This results in high electricity usage and, consequently, higher carbon emissions.
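To see how estimates of training energy are typically put together, here is a minimal back-of-the-envelope sketch. It assumes that energy is simply the number of accelerators times their average power draw times the training time, scaled by a data center overhead factor for cooling and power delivery. Every input in the example run is a hypothetical placeholder, not a measurement of GPT-4 or any real system.

```python
def training_energy_mwh(num_accelerators, avg_power_kw, hours, overhead_factor=1.0):
    """Rough electricity estimate for a training run, in MWh.

    overhead_factor is an assumed multiplier (>= 1.0) for cooling and
    power-delivery losses on top of the accelerators themselves.
    """
    if num_accelerators < 0 or avg_power_kw < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    if overhead_factor < 1.0:
        raise ValueError("overhead_factor must be at least 1.0")
    kwh = num_accelerators * avg_power_kw * hours * overhead_factor
    return kwh / 1000.0  # kWh -> MWh


def emissions_tonnes_co2(energy_mwh, grid_kg_co2_per_mwh):
    """Convert energy to emissions for an assumed grid carbon intensity."""
    return energy_mwh * grid_kg_co2_per_mwh / 1000.0  # kg -> tonnes


if __name__ == "__main__":
    # Hypothetical run: 1,000 accelerators at 0.5 kW each for 30 days,
    # with 20% facility overhead and an assumed grid intensity.
    energy = training_energy_mwh(1000, 0.5, 24 * 30, overhead_factor=1.2)
    co2 = emissions_tonnes_co2(energy, grid_kg_co2_per_mwh=400)
    print(f"Estimated energy: {energy:.0f} MWh")
    print(f"Estimated emissions: {co2:.1f} tonnes CO2")
```

The point of the sketch is that each factor (hardware count, power draw, training time, facility overhead, and how clean the grid is) is a separate lever for reducing the total.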

To give you a perspective, here’s what Loris Petro, Marketing Strategy Lead and Digital Marketing Manager at Kratom Earth, has to say:

“Training models like GPT-4 uses an enormous amount of energy, equivalent to the lifetime emissions of several cars. It’s estimated that GPT-4 training alone consumed enough electricity to power over 1,400 homes for a month. I’ve spoken with friends in the tech industry who are shocked by how much power these systems require. It’s clear that as AI expands, energy consumption is going to be a major factor we need to address.”

Similarly, Tracie Crites, Chief Marketing Officer of HEAVY Equipment Appraisal, shares,

“Training large AI models like GPT-4 consumes a staggering amount of energy. It’s estimated that training GPT-4 required between 51,773 MWh and 62,319 MWh of energy. To put that into perspective, that’s equivalent to the annual electricity consumption of thousands of average American homes. This high energy demand is due to the complex computations and the vast amount of data these models process.”
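To check the comparison with American homes, here is a quick arithmetic sketch. It assumes an average U.S. household uses about 10.5 MWh of electricity per year; that figure is an approximation introduced for this example, not a number from the quote.

```python
# Assumption: an average U.S. home uses roughly 10.5 MWh of electricity per year.
AVG_US_HOME_MWH_PER_YEAR = 10.5


def homes_powered_for_a_year(energy_mwh, home_mwh_per_year=AVG_US_HOME_MWH_PER_YEAR):
    """Number of homes whose annual electricity use equals energy_mwh."""
    if home_mwh_per_year <= 0:
        raise ValueError("home_mwh_per_year must be positive")
    return energy_mwh / home_mwh_per_year


if __name__ == "__main__":
    # The range quoted above for GPT-4 training.
    for estimate_mwh in (51_773, 62_319):
        homes = homes_powered_for_a_year(estimate_mwh)
        print(f"{estimate_mwh:,} MWh is about {homes:,.0f} homes for a year")
```

Under that assumption, the quoted range works out to roughly 4,900 to 5,900 homes, which matches the “thousands of average American homes” description.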

What Technologies Are Emerging to Power AI Infrastructure More Sustainably?

As energy consumption rises, companies and researchers are exploring sustainable solutions for powering AI systems. Here are some of the latest technologies being developed to make AI greener:

- Model Compression and Edge Computing: According to Loris Petro,

“Technologies like model compression and edge computing are helping reduce the energy demands of AI. Model compression makes models smaller and more efficient, while edge computing allows data to be processed closer to the source, reducing reliance on large data centers. Neuromorphic computing, which mimics the brain’s efficiency, is another exciting development. I’ve seen companies already testing these methods, and the results are promising for both sustainability and cost savings.”

- Liquid Cooling Systems: This method is becoming a popular way to manage energy usage in data centers. As Tracie Crites explains,

“One of the main areas of development is in liquid cooling systems, which are far more efficient at heat dissipation than traditional air cooling. Liquid cooling allows data centers to run high-performance workloads while significantly reducing their energy usage.”

These advancements are promising steps toward reducing the energy consumption of AI models, especially as their use continues to grow.

Can AI Improve Its Own Energy Efficiency Through Model Pruning or Quantization Techniques?

AI is also helping improve its own efficiency through techniques like model pruning and quantization.

Model pruning removes redundant parts of a model, such as parameters that contribute little to its output, which lightens the computational load. Quantization, on the other hand, reduces the numerical precision of the values used in computations, making them less energy-intensive.
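To make the pruning idea concrete, here is a minimal sketch of one common variant, magnitude pruning, in plain Python. It assumes that weights with the smallest absolute values are the “redundant” ones; the function name and the toy weight list are made up for illustration, and real frameworks offer their own pruning utilities.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    This is an illustrative sketch: it treats small-magnitude weights as
    redundant, which is one heuristic among several.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    toy_weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # hypothetical values
    print(magnitude_prune(toy_weights, sparsity=0.5))
```

Zeroed weights only save energy when the software or hardware running the model can skip them, so pruning is usually paired with sparse-aware execution.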

Here’s how Loris Petro describes these methods:

“Yes, AI can become more energy-efficient through methods like model pruning, which eliminates unnecessary data, and quantization, which reduces data precision where it’s not needed. These techniques make models run faster and use less power without affecting performance. I’ve heard from colleagues that this approach not only cuts energy costs but also improves speed. As AI systems grow, these strategies will be key to keeping them sustainable.”

Tracie Crites further adds,

“AI can improve its energy efficiency through techniques like model pruning and quantization. Model pruning involves removing unnecessary parameters from a neural network, which reduces the computational load without significantly affecting performance.

Quantization, on the other hand, reduces the precision of the numbers used in computations, which can drastically lower the energy required for processing. For example, using 8-bit integers instead of 32-bit floating-point numbers can cut energy consumption by up to 4 times. These techniques help make AI models more efficient and less power-hungry.”

Both experts agree that these techniques can help lower power usage, making AI systems leaner and more sustainable.
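The 8-bit versus 32-bit comparison can be sketched in a few lines. The example below assumes a simple symmetric scheme in which every value shares one scale factor and is rounded to an integer between -127 and 127; the function names are hypothetical, and production toolkits use more refined schemes.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Avoid dividing by zero when every value is 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical values
    q, scale = quantize_int8(weights)
    print("int8 values:", q)
    print("restored:", dequantize(q, scale))
    # Each value now needs 8 bits instead of 32.
    print("storage reduction:", 32 // 8, "x")
```

The fourfold figure here is the reduction in bits per value. How much energy that actually saves depends on the hardware and the workload, which is why the quote says “up to.”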

How Can Nuclear Power Be Utilized to Meet the Growing Energy Demands of AI Research?

As AI grows, reliable energy sources become more important. While renewable sources are great, they are not always stable. Here’s where nuclear power comes in as a possible solution for powering energy-intensive AI research.

Loris Petro believes nuclear energy could play a big role:

“Nuclear power provides a stable, carbon-free energy source that’s well-suited for the continuous power needs of AI research. Companies like Microsoft are exploring nuclear energy to reduce their reliance on fossil fuels while ensuring consistent power for AI operations. A friend in tech shared how their company is looking into similar solutions to manage rising energy costs. If more companies adopt nuclear power, it could reshape how we power AI and other energy-intensive industries.”

Tracie Crites shares a similar view:

“Nuclear power offers a stable and high-output energy source that could effectively meet the increasing demands of AI research. Unlike renewables, which can be intermittent, nuclear energy provides a continuous power supply, which is ideal for data centers that need to operate 24/7. Integrating nuclear energy with AI infrastructure could help lower the carbon footprint associated with training large models, as nuclear power is a zero-emission energy source.”

Nuclear energy’s stability and low emissions make it a viable option to consider for supporting AI research, especially as power needs keep climbing.

The Environmental Cost of Training AI Models

AI power consumption has a significant environmental impact, as training and operating AI models requires a lot of energy. AI systems already put considerable strain on the power grid, since AI data centers need large amounts of electricity to run. As data center power demand grows, the global energy cost linked to AI continues to rise, putting pressure on the environment and energy resources.

Using AI requires a lot of computing power, which further drives up the energy needed. Here’s how AI power consumption is creating environmental challenges:

- Demand Growth: AI demand growth is adding to global electricity consumption, making it harder to achieve energy efficiency targets.

- Energy Intensive: The energy-intensive nature of AI training means data centers may need many times more energy than typical IT infrastructure.

- Energy Cost and Crisis: As AI data centers expand, they contribute to the energy crisis by increasing global energy costs.

- Renewable Energy Transition: Some AI researchers are pushing for a transition to renewable energy to help reduce emissions caused by AI’s power usage.

With the growing demand from AI, there is a pressing need to consider energy-efficient alternatives. If AI continues on this trajectory without changes, data centers may face even higher energy costs and power demand in the near future.

Efforts to Make AI Greener and Energy-Efficient

With AI power consumption on the rise, there’s a strong push to develop methods that make AI greener and more energy-efficient. AI training requires an enormous amount of data, and the energy consumption associated with it has been compared to the annual power usage of small countries. As AI applications grow, the demand for energy is climbing too, and training generative AI models contributes significantly to that increase.

Using Less Energy for Data Storage

One area to focus on is data storage, as AI requires vast amounts of data. Improving data storage methods to save energy can help reduce the total energy consumption associated with AI. Innovations that enable generative AI tools and models to use less energy could also reduce the power requirements needed to deploy AI models effectively.

Developing Energy-Efficient AI Systems

Efforts are being made to create generative AI systems that consume less energy. By optimizing AI models and improving their efficiency, developers aim to shrink AI’s energy footprint. Advanced techniques help these models run at lower power, which could ease the electricity consumption of the data centers that host them. Meeting U.S. power demand growth while saving energy will be crucial as the AI race intensifies.

Exploring Renewable Sources of Energy

Many companies are now looking at renewable sources of energy to power their AI infrastructure. Transitioning to renewables can mitigate the environmental impact of AI’s annual energy consumption. This shift helps offset the footprint of AI power consumption, and as generative AI tools become a part of our daily lives, finding sustainable energy solutions becomes essential to manage power demands efficiently.

The Future of AI Power Consumption

As AI power consumption continues to rise, so does the need to find efficient ways to manage it. AI is also driving the construction of new data centers worldwide, which are essential to support data-heavy processes. However, with this data center growth, we’re seeing increased energy needs tied to data processing. The goal now is to make AI more efficient so that it consumes less power without compromising performance.

Key Trends and Solutions

- Optimizing GPU Power: GPUs are crucial for AI’s computing needs, but they consume a lot of energy. New GPU designs aim to deliver the same performance with much less energy.

- Utilizing Alternative Energy Sources: Shifting to sustainable energy sources can offset AI power consumption. Solar, wind, and other renewables can support data center power use, helping reduce the environmental impact.

- Energy-Efficient Data Centers: Many companies are focusing on building data centers that require less energy to operate. By investing in energy-efficient technology, operators can minimize the energy their facilities consume while still supporting AI’s demands.

- Managing Power Needs: As AI grows, so does the power needed to process data. Solutions like power regulation and smarter hardware designs help balance global data demands against technology’s energy impact.

These solutions highlight how AI’s energy needs are evolving. As we address AI power consumption, future technologies will play a key role in reducing the environmental footprint while meeting AI’s ever-growing demands.